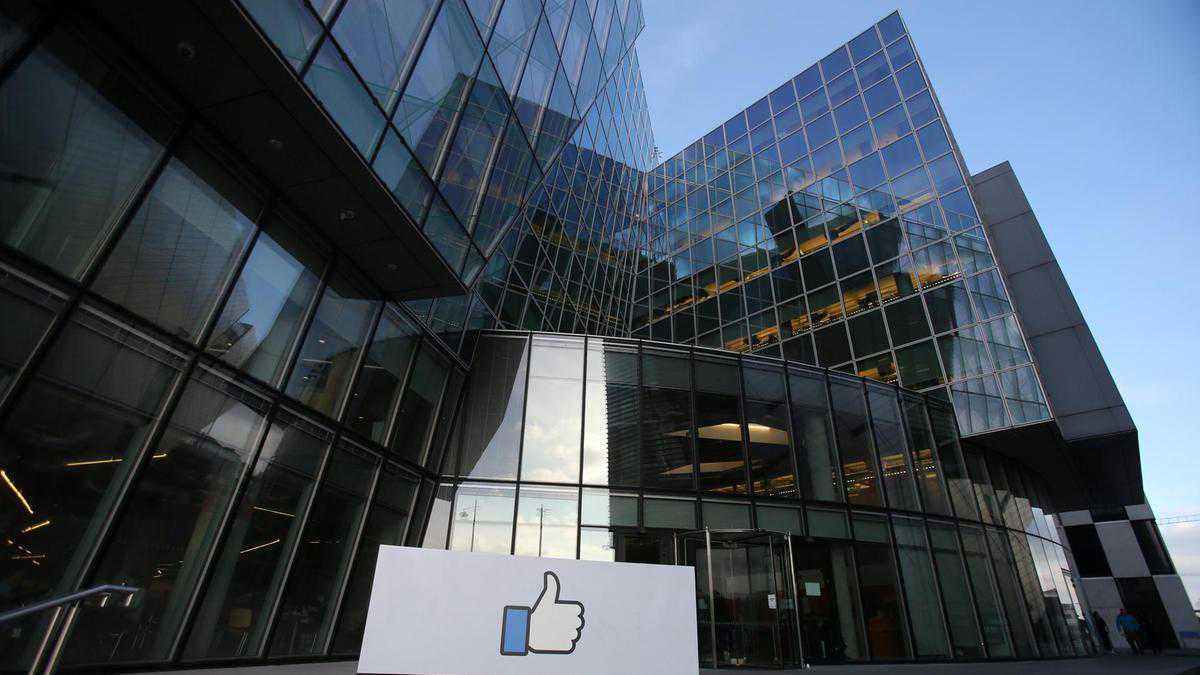

Facebook's ‘factory’ conditions for moderators suffering trauma

04 March, 2021

The chairman of Ireland’s justice committee has raised concerns that Facebook is not “urgently addressing” content issues after a large number of moderators highlighted problems in their workplace.

James Lawless met Facebook chief executive Mark Zuckerberg three years ago to discuss the company's use of agency staff to screen graphic content.

The social media giant now employs 15,000 content moderators globally through third-party outsourcing agencies, with most of them employed in Dublin by the firms CPL and Accenture.

Moderators have raised concerns about training and support after some staff suffered trauma from viewing graphic content, and 30 former employees in Europe are suing for damages.

“There are lots of problems stemming from decisions about content quality and tone being made in factory-type conditions,” Mr Lawless told The National.

“This is not ideal for the broader platform, including user safety and confidence that the right calls are being made on challenging content, and it is obviously not positive for the moderators themselves.

“I previously raised these issues with Facebook, including directly with Mark Zuckerberg in a meeting in Dublin in 2018.

“I am still not satisfied that Facebook is addressing the problem with enough urgency, and the fact that these moderators are held at a legal remove, through the use of agency staff, suggests the long-term intention is not great for them either.”

In a landmark settlement in the US last May, Facebook agreed to pay $52 million to 11,250 current and former moderators to compensate them for mental health issues developed on the job.

Hundreds of moderators currently working for Facebook are also campaigning for more support, training and better working conditions.

Former moderators have told The National that they had to assess up to 1,000 posts per shift, including disturbing images, against a huge range of standards, and were expected to achieve 98 per cent accuracy.

Eilish O’Gara, an extremism researcher at the Henry Jackson Society think tank, said the company has “neglected” the need to prioritise removing graphic content.

“It is absolutely vital that violent and dangerous extremist content is identified and removed from all online platforms,” she said.

“For too long, Facebook and other social media platforms have neglected their responsibility to protect the public from harmful extremist content.

“To do so effectively now, those tasked with the incredibly difficult job of identifying, viewing and de-platforming such content must be given adequate training and wrap-around psychological support so they can carry out their work meaningfully without damaging their own mental health.”

Facebook has been using algorithms to remove inappropriate content where possible.

Facebook Oversight Board investigating moderation protocols

On Tuesday, Facebook’s own Oversight Board revealed it is investigating how the company's moderation works.

Board member Alan Rusbridger told the UK’s House of Lords communications and digital committee that it will look beyond Facebook’s decisions to remove or retain content.

“We’re already a bit frustrated by just saying ‘take it down’ or ‘leave it up’,” he said. “What happens if you want to make something less viral? What happens if you want to put up an interstitial?

“What happens if, without commenting on any high-profile current cases, you didn’t want to ban someone forever but wanted to put them in a ‘sin bin’ so that if they misbehave again you can chuck them off?

“These are all things that the board might ask Facebook for in time. At some point we’re going to ask to see the algorithm, I feel sure, whatever that means. Whether we’ll understand it when we see it is a different matter.

“I think we need more technology people on the board who can give us information independently of Facebook. Because it is going to be a very difficult thing to understand how this artificial intelligence works.”

Recently, Facebook revealed it will implement some content moderation changes recommended by its Oversight Board in January.

Nick Clegg, the company’s vice president of global affairs, said changes would be made in 11 areas as a result of the board’s recommendations.

They include more transparency around policies on health misinformation and nudity, and improved automated detection capabilities.

Facebook previously told The National it continually reviews its working practices.

“The teams who review content help make our platforms safer and we’re grateful for the important work that they do,” a Facebook representative said.

“Our safety and security efforts are never finished, so we’re always working to do better and to provide more support for content reviewers around the world.

“We are committed to providing support for those who review content for Facebook, as we recognise that reviewing certain types of content can often be difficult.”

Source: www.thenationalnews.com
